It’s often uncanny how monsters, aliens, and animals in films and video games have strikingly human facial features and expressions.

In the past, the job of rendering realistic animated faces was painstaking at best. Recent advances in animation, however, greatly reduce the time involved in creating characters.

“In entertainment applications such as movies and games, human face models often must be created by artists rather than using an automated method. For example, many characters in animated movies and games are imaginary, so they cannot be scanned. Thus, there is need for a facial design method that is simultaneously simple, understandable, and powerful for artists. We present a system that elegantly addresses this need,” write Seung-Hyun Yoon, John Lewis, and Taehyun Rhee, authors of “Blending Face Details: Synthesizing a Face Using Multiscale Face Models,” which appears in the November/December 2017 issue of IEEE Computer Graphics and Applications.

How the process begins

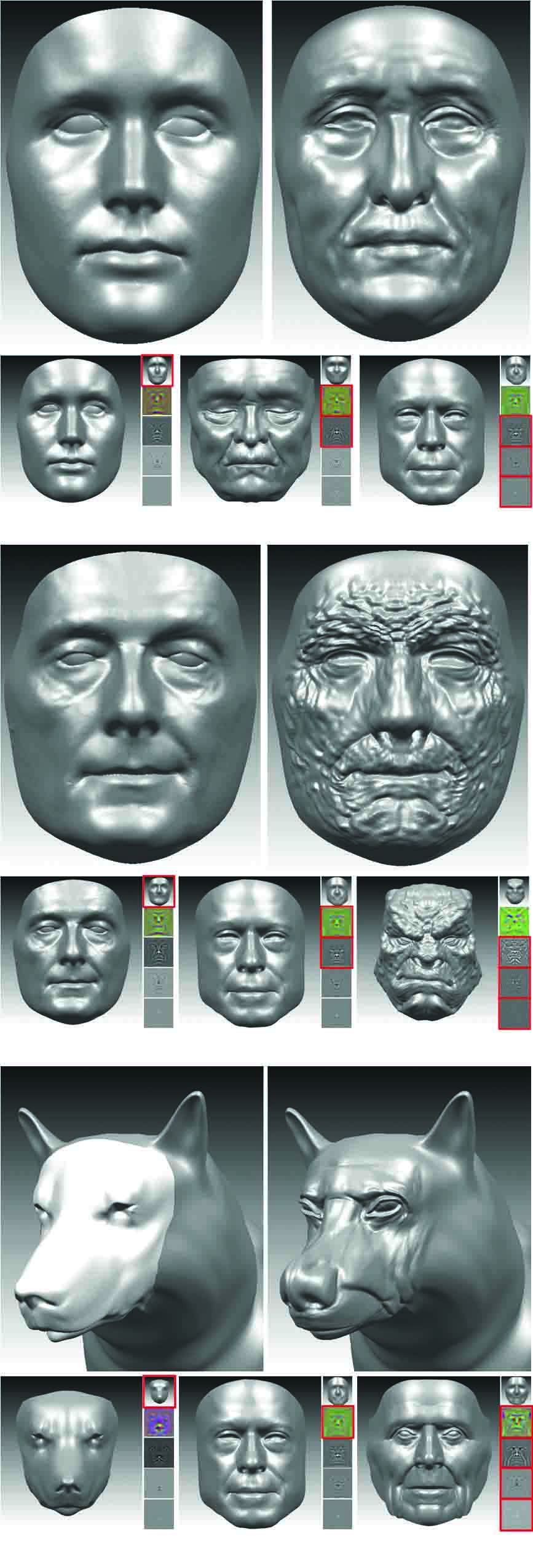

Using this system, animation artists can take the features of a human face and apply them to a monster or animal.

A big part of the challenge is grabbing certain features from the human face and transporting them to the animated character’s face.

“A method that leverages existing face models can save much effort. Shape blending is an especially simple, but powerful, approach that is prevalent in facial animation for generating arbitrary expressions,” say the authors.
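To make shape blending concrete, here is a minimal Python sketch of the classic delta formulation used in facial animation: each target shape contributes its weighted offset from a neutral face, vertex by vertex. The mesh representation (plain lists of 3D tuples) and the function name `blend_shapes` are illustrative assumptions, not the authors' code.

```python
# Illustrative sketch of delta shape blending (not the authors' implementation).
from typing import List, Sequence, Tuple

Vertex = Tuple[float, float, float]
Mesh = List[Vertex]  # vertex i must denote the same facial point in every mesh


def blend_shapes(neutral: Mesh, targets: Sequence[Mesh], weights: Sequence[float]) -> Mesh:
    """Delta shape blending: neutral + sum_k w_k * (target_k - neutral), per vertex."""
    if len(targets) != len(weights):
        raise ValueError("need exactly one weight per target shape")
    if any(len(t) != len(neutral) for t in targets):
        raise ValueError("all shapes must share the same vertex count and order")
    blended: Mesh = []
    for i, (nx, ny, nz) in enumerate(neutral):
        dx = dy = dz = 0.0
        for target, w in zip(targets, weights):
            tx, ty, tz = target[i]
            dx += w * (tx - nx)
            dy += w * (ty - ny)
            dz += w * (tz - nz)
        blended.append((nx + dx, ny + dy, nz + dz))
    return blended


# Toy two-vertex "face" with a smile target and a brow-raise target.
neutral = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
smile = [(0.0, 1.0, 0.0), (1.0, 0.0, 0.0)]
brow = [(0.0, 0.0, 0.0), (1.0, 2.0, 0.0)]
print(blend_shapes(neutral, [smile, brow], [0.5, 0.25]))
# [(0.0, 0.5, 0.0), (1.0, 0.5, 0.0)]
```

Setting every weight to zero returns the neutral face, and a single weight of 1.0 reproduces that target exactly, which is what makes the scheme easy for artists to reason about.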

Other researchers “utilized shape blending as an alternative tool for synthesizing a new face model from existing faces. They segment meaningful face regions (such as the eyes, nose, and mouth) from different faces and spatially assemble them into a new face. Their method requires vertex-wise correspondence across each face and its blendshapes,” they added.
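The region-assembly idea, and why it needs vertex-wise correspondence, can be sketched in a few lines. This hypothetical `assemble_regions` helper assumes every face lists the same vertices in the same order and that each vertex carries a region label; it simply copies each vertex from whichever face the artist picked for that region. It illustrates the general approach only, not the cited researchers' implementation, and a practical version would also smooth the seams between regions.

```python
# Hypothetical sketch of region-based face assembly (not the cited researchers' code).
from typing import Dict, List, Sequence, Tuple

Vertex = Tuple[float, float, float]


def assemble_regions(
    faces: Dict[str, List[Vertex]],
    region_of_vertex: Sequence[str],
    source_for_region: Dict[str, str],
) -> List[Vertex]:
    """Build a new face by taking each vertex from the face chosen for its region.

    This only makes sense because every face stores the same vertices in the
    same order (vertex-wise correspondence); otherwise index i would refer to
    different facial points on different faces.
    """
    n = len(region_of_vertex)
    for name, verts in faces.items():
        if len(verts) != n:
            raise ValueError(f"face {name!r} is not in vertex-wise correspondence")
    return [faces[source_for_region[region]][i] for i, region in enumerate(region_of_vertex)]


# Three corresponding vertices, one per region, on two donor faces.
faces = {
    "A": [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)],
    "B": [(0.0, 1.0, 0.0), (1.0, 1.0, 0.0), (2.0, 1.0, 0.0)],
}
regions = ["eyes", "nose", "mouth"]
new_face = assemble_regions(faces, regions, {"eyes": "A", "nose": "B", "mouth": "A"})
print(new_face)  # [(0.0, 0.0, 0.0), (1.0, 1.0, 0.0), (2.0, 0.0, 0.0)]
```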

What makes this new system unique?

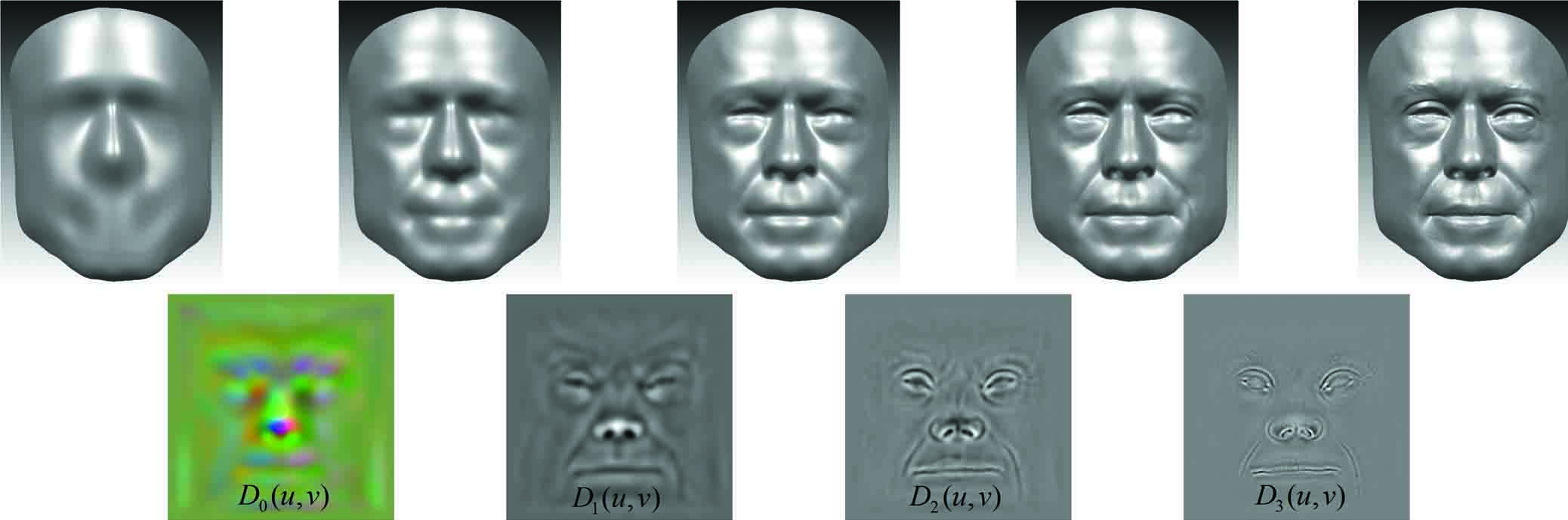

“We present a novel shape blending scheme to synthesize a new face model using weighted blending of spatial details from various face models. Our multiscale face models are fully corresponded in a common parameter space, where the artist can interactively define semantic correspondences across faces and scales,” say the authors.
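As a rough sketch of what “weighted blending of spatial details” in a common parameter space could look like, the Python below assumes each face model is stored as a base map plus a list of detail maps, all sampled on the same flattened (u, v) grid so that sample k refers to the same semantic spot on every face. Values are scalars here (think of a height or displacement) to keep the example short; the `MultiscaleFace` layout and the separate base and per-scale weights are assumptions chosen for illustration.

```python
# Hypothetical sketch: weighted blending of multiscale face maps on a shared grid.
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class MultiscaleFace:
    """Assumed layout: all maps share one flattened (u, v) grid."""
    base: List[float]           # coarse base-surface value per sample
    details: List[List[float]]  # details[s][k]: detail at scale s, sample k


def blend_multiscale(
    faces: Sequence[MultiscaleFace],
    base_weights: Sequence[float],
    detail_weights: Sequence[Sequence[float]],
) -> List[float]:
    """out[k] = sum_i a_i * base_i[k] + sum_i sum_s b_is * details_i[s][k]."""
    if not (len(faces) == len(base_weights) == len(detail_weights)):
        raise ValueError("need one base weight and one row of detail weights per face")
    n = len(faces[0].base)
    out = [0.0] * n
    for face, a, row in zip(faces, base_weights, detail_weights):
        if len(face.base) != n or len(row) != len(face.details):
            raise ValueError("faces must share the grid, with one weight per scale")
        if any(len(layer) != n for layer in face.details):
            raise ValueError("detail maps must use the shared grid")
        for k in range(n):
            out[k] += a * face.base[k]
            for b, layer in zip(row, face.details):
                out[k] += b * layer[k]
    return out


face_a = MultiscaleFace(base=[0.0, 1.0, 0.0], details=[[0.1, 0.0, 0.0], [0.0, 0.0, 0.2]])
face_b = MultiscaleFace(base=[0.0, 2.0, 0.0], details=[[0.0, 0.3, 0.0], [0.0, 0.0, -0.1]])
# Average the two base shapes, take coarse detail from A and fine detail from B.
print(blend_multiscale([face_a, face_b], [0.5, 0.5], [[1.0, 0.0], [0.0, 1.0]]))
# [0.1, 1.5, -0.1]
```

Keeping base and detail weights separate is what lets an artist mix, say, one face's overall shape with another face's fine wrinkles.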

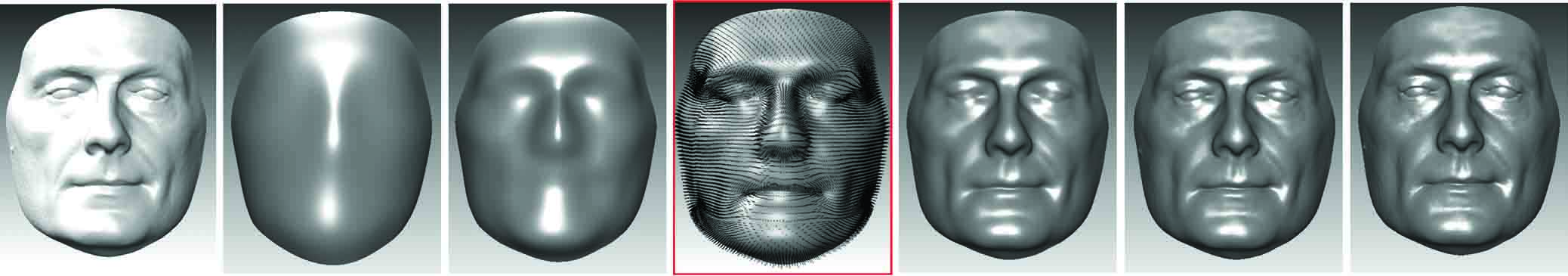

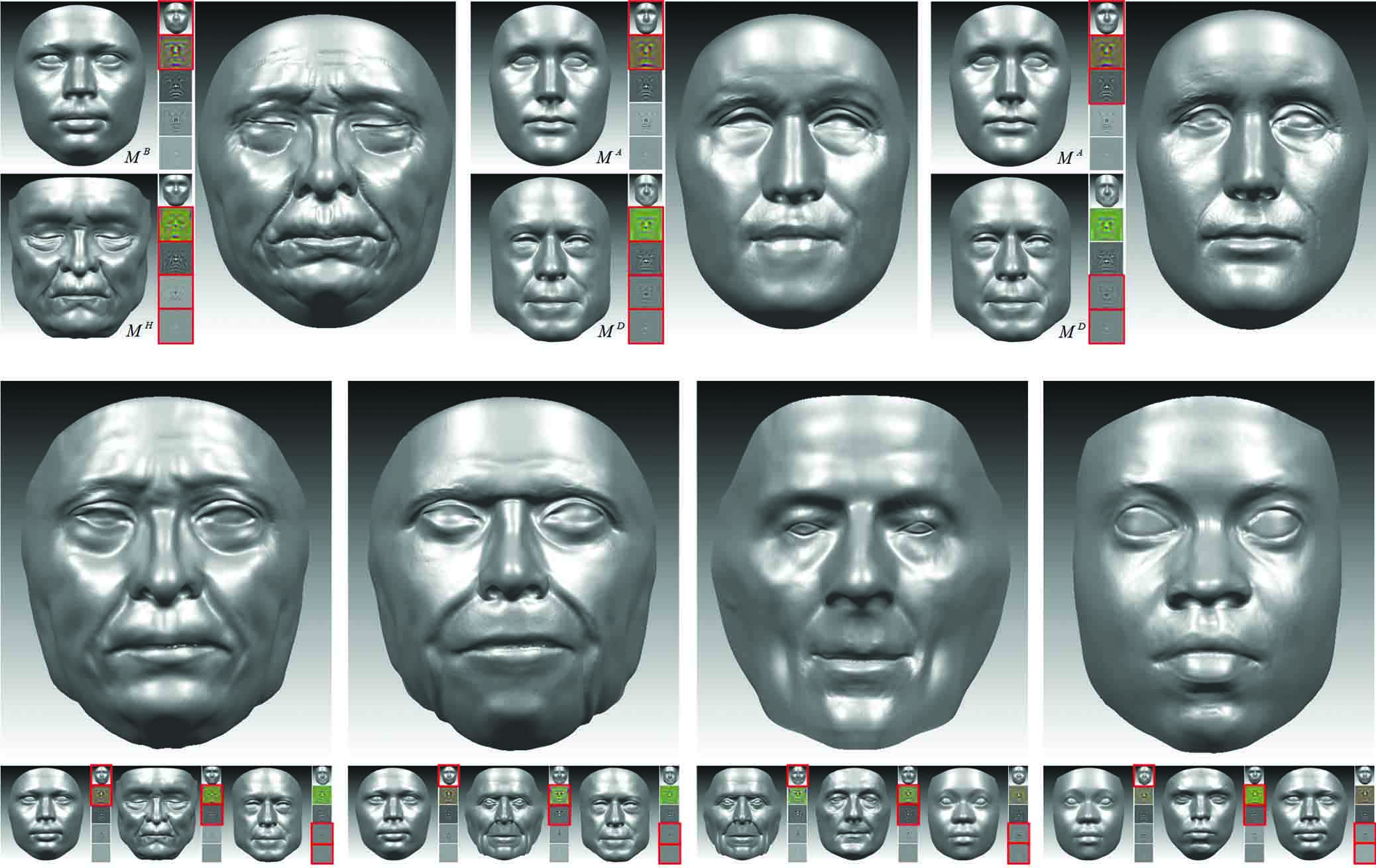

Figures 2 through 7 below show the results of approximating the first face model at different levels.
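The idea of approximating a face “at different levels” can be illustrated with a generic coarse-to-fine decomposition. The sketch below uses a 1D profile and repeated box-filter smoothing as stand-ins for a face surface and whatever fitting the authors actually use: the most-smoothed copy becomes the base, the differences between successive smoothing levels become detail layers, and adding more layers yields progressively finer approximations. The function names and the filter are assumptions.

```python
# Generic coarse-to-fine decomposition sketch (assumed technique, 1D for brevity).
from typing import List, Tuple


def smooth(signal: List[float]) -> List[float]:
    """3-tap box filter with clamped ends (a stand-in for surface smoothing)."""
    n = len(signal)
    return [
        (signal[max(i - 1, 0)] + signal[i] + signal[min(i + 1, n - 1)]) / 3.0
        for i in range(n)
    ]


def decompose(signal: List[float], num_scales: int) -> Tuple[List[float], List[List[float]]]:
    """Split a signal into a smooth base plus detail layers ordered coarse -> fine."""
    levels = [list(signal)]
    for _ in range(num_scales):
        levels.append(smooth(levels[-1]))
    # Detail j is what smoothing step j removed; the layers telescope, so
    # base + sum(details) reproduces the original (up to rounding).
    fine_to_coarse = [
        [f - c for f, c in zip(levels[j], levels[j + 1])] for j in range(num_scales)
    ]
    return levels[-1], fine_to_coarse[::-1]


def approximate(base: List[float], details: List[List[float]], level: int) -> List[float]:
    """Approximation that adds only the `level` coarsest detail layers to the base."""
    out = list(base)
    for layer in details[:level]:
        out = [o + d for o, d in zip(out, layer)]
    return out


profile = [0.0, 3.0, 0.0, 6.0, 0.0, 3.0, 0.0]  # toy 1D "face" cross-section
base, details = decompose(profile, num_scales=4)
for level in range(len(details) + 1):
    approx = approximate(base, details, level)
    error = max(abs(a - p) for a, p in zip(approx, profile))
    print(f"level {level}: max error {error:.3f}")
```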

Getting the details

Artists work with a multiscale face model setup that uses scalar maps to provide more flexibility for fine-tuning.
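One plausible reading of how scalar maps aid fine-tuning is as spatially varying blend weights: instead of one weight per face, the artist paints a weight per sample, so a face can dominate around the eyes but fade out near the mouth. The sketch below assumes that interpretation and normalizes the painted weights at each sample; `blend_with_weight_maps` is a hypothetical name.

```python
# Hypothetical sketch: per-sample blending driven by painted scalar weight maps.
from typing import List, Sequence


def blend_with_weight_maps(
    layers: Sequence[List[float]], weight_maps: Sequence[List[float]]
) -> List[float]:
    """Per-sample normalized blend: out[k] = sum_i m_i[k] * x_i[k] / sum_i m_i[k]."""
    if len(layers) != len(weight_maps) or not layers:
        raise ValueError("need one weight map per layer")
    n = len(layers[0])
    if any(len(x) != n for x in layers) or any(len(m) != n for m in weight_maps):
        raise ValueError("layers and weight maps must share the same grid")
    out = []
    for k in range(n):
        total = sum(m[k] for m in weight_maps)
        if total <= 0.0:
            raise ValueError(f"weights at sample {k} must sum to a positive value")
        out.append(sum(m[k] * x[k] for x, m in zip(layers, weight_maps)) / total)
    return out


# Face A dominates on the left, face B on the right, with a 50/50 mix in the middle.
face_a = [1.0, 1.0, 1.0]
face_b = [3.0, 3.0, 3.0]
print(blend_with_weight_maps([face_a, face_b], [[1.0, 0.5, 0.0], [0.0, 0.5, 1.0]]))
# [1.0, 2.0, 3.0]
```

Normalizing per sample means artists can paint rough, unnormalized strokes without the result drifting in scale; that choice is ours, not something the article specifies.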

Creating curves

“By constructing an efficient data structure such as a quadtree for the parameterization, we can interactively visualize the 3D curves on the 3D face model corresponding to the 2D feature curves in parameter space. This provides intuitive user controls to edit 3D curves in the 2D parameter space,” say the authors.
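The quadtree lookup the authors mention can be sketched as follows, under stated assumptions: the face mesh is given as triangles that each carry both 2D parameter-space corners and 3D surface corners, a quadtree over the unit (u, v) square narrows each query to a few candidate triangles, and barycentric interpolation lifts a 2D curve point onto the 3D surface. The class names, split thresholds, and the flat test surface are illustrative, not taken from the paper.

```python
# Hypothetical sketch: quadtree over (u, v) + barycentric lift to 3D (not the authors' code).
from dataclasses import dataclass, field
from typing import ClassVar, List, Optional, Tuple

UV = Tuple[float, float]
XYZ = Tuple[float, float, float]


@dataclass
class Triangle:
    uv: Tuple[UV, UV, UV]      # corners in the 2D parameter space
    xyz: Tuple[XYZ, XYZ, XYZ]  # the same corners on the 3D face surface

    def bbox(self) -> Tuple[float, float, float, float]:
        us, vs = zip(*self.uv)
        return min(us), min(vs), max(us), max(vs)

    def lift(self, p: UV, eps: float = 1e-12) -> Optional[XYZ]:
        """Barycentric interpolation of the 3D corners, or None if p lies outside."""
        (x1, y1), (x2, y2), (x3, y3) = self.uv
        det = (y2 - y3) * (x1 - x3) + (x3 - x2) * (y1 - y3)
        if det == 0:
            return None  # degenerate triangle in parameter space
        l1 = ((y2 - y3) * (p[0] - x3) + (x3 - x2) * (p[1] - y3)) / det
        l2 = ((y3 - y1) * (p[0] - x3) + (x1 - x3) * (p[1] - y3)) / det
        l3 = 1.0 - l1 - l2
        if min(l1, l2, l3) < -eps:
            return None
        a, b, c = self.xyz
        return tuple(l1 * pa + l2 * pb + l3 * pc for pa, pb, pc in zip(a, b, c))


@dataclass
class QuadNode:
    """Axis-aligned cell of the (u, v) square; leaves hold candidate triangles."""
    x0: float
    y0: float
    x1: float
    y1: float
    depth: int = 0
    items: List[Triangle] = field(default_factory=list)
    children: List["QuadNode"] = field(default_factory=list)
    MAX_ITEMS: ClassVar[int] = 4  # split a leaf once it holds more triangles than this
    MAX_DEPTH: ClassVar[int] = 8  # cap depth where many triangles share one vertex

    def overlaps(self, box: Tuple[float, float, float, float]) -> bool:
        bx0, by0, bx1, by1 = box
        return not (bx1 < self.x0 or bx0 > self.x1 or by1 < self.y0 or by0 > self.y1)

    def insert(self, tri: Triangle) -> None:
        if self.children:  # internal node: push down to every overlapping child
            for child in self.children:
                if child.overlaps(tri.bbox()):
                    child.insert(tri)
            return
        self.items.append(tri)
        if len(self.items) > self.MAX_ITEMS and self.depth < self.MAX_DEPTH:
            mx, my, d = (self.x0 + self.x1) / 2, (self.y0 + self.y1) / 2, self.depth + 1
            self.children = [
                QuadNode(self.x0, self.y0, mx, my, d), QuadNode(mx, self.y0, self.x1, my, d),
                QuadNode(self.x0, my, mx, self.y1, d), QuadNode(mx, my, self.x1, self.y1, d),
            ]
            pending, self.items = self.items, []
            for t in pending:
                self.insert(t)

    def lift(self, p: UV) -> Optional[XYZ]:
        """Descend only into cells containing p, then test the few triangles there."""
        if not (self.x0 <= p[0] <= self.x1 and self.y0 <= p[1] <= self.y1):
            return None
        for child in self.children:
            hit = child.lift(p)
            if hit is not None:
                return hit
        for tri in self.items:
            hit = tri.lift(p)
            if hit is not None:
                return hit
        return None


def build_grid(n: int) -> QuadNode:
    """Hypothetical test surface: the unit (u, v) square mapped to (u, v, u + v)."""
    root = QuadNode(0.0, 0.0, 1.0, 1.0)
    for i in range(n):
        for j in range(n):
            u0, v0, u1, v1 = i / n, j / n, (i + 1) / n, (j + 1) / n
            for corners in (((u0, v0), (u1, v0), (u1, v1)), ((u0, v0), (u1, v1), (u0, v1))):
                root.insert(Triangle(corners, tuple((u, v, u + v) for u, v in corners)))
    return root


root = build_grid(4)
curve_2d = [(0.1, 0.2), (0.3, 0.35), (0.6, 0.5), (0.9, 0.8)]  # a feature curve in (u, v)
curve_3d = [root.lift(p) for p in curve_2d]  # the same curve lifted onto the 3D surface
```

Because the test surface is linear (z = u + v), the lifted points match it to within rounding, which makes it a convenient check. On a real face mesh, the same lookup would let an edit to a 2D curve be redrawn on the 3D model at interactive rates.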

Working with 15 models

The system can interactively blend multiscale face models without additional hardware acceleration.

“Because we used 15 faces consisting of a base surface and four-scale CDMs, we have a 15 + 15 × 4 dimensional blendshape space; the total number of blendshapes can be limited by the artist,” say the authors.
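The arithmetic behind the quoted dimension is one base-surface weight per face plus one weight per (face, scale) detail map: 15 + 15 × 4 = 75. The short sketch below spells that out and shows one hypothetical way an artist-imposed limit might work, by keeping only the largest-magnitude weights. The naming scheme and the `limit_active` rule are assumptions; the authors only state that the number of blendshapes can be limited by the artist.

```python
# Hypothetical sketch: counting blendshapes and capping how many are active.
from typing import Dict, List

NUM_FACES = 15
NUM_SCALES = 4  # detail maps per face, per the quoted setup


def blendshape_names(num_faces: int, num_scales: int) -> List[str]:
    """One base-surface blendshape per face plus one per (face, detail scale)."""
    names = []
    for f in range(num_faces):
        names.append(f"face{f:02d}/base")
        names.extend(f"face{f:02d}/scale{s}" for s in range(num_scales))
    return names


def limit_active(weights: Dict[str, float], max_active: int) -> Dict[str, float]:
    """Assumed artist cap: keep only the max_active largest-magnitude weights."""
    ranked = sorted(weights.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return dict(ranked[:max_active])


names = blendshape_names(NUM_FACES, NUM_SCALES)
print(len(names))  # 75, i.e. 15 + 15 * 4

weights = {name: 0.0 for name in names}
weights.update({"face00/base": 0.6, "face03/base": 0.4, "face07/scale3": 0.9, "face01/scale0": 0.05})
print(limit_active(weights, 3))
```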

Here comes the merging

The four larger faces toward the bottom show examples of synthesized face models using weighted blending of three multiscale face models.

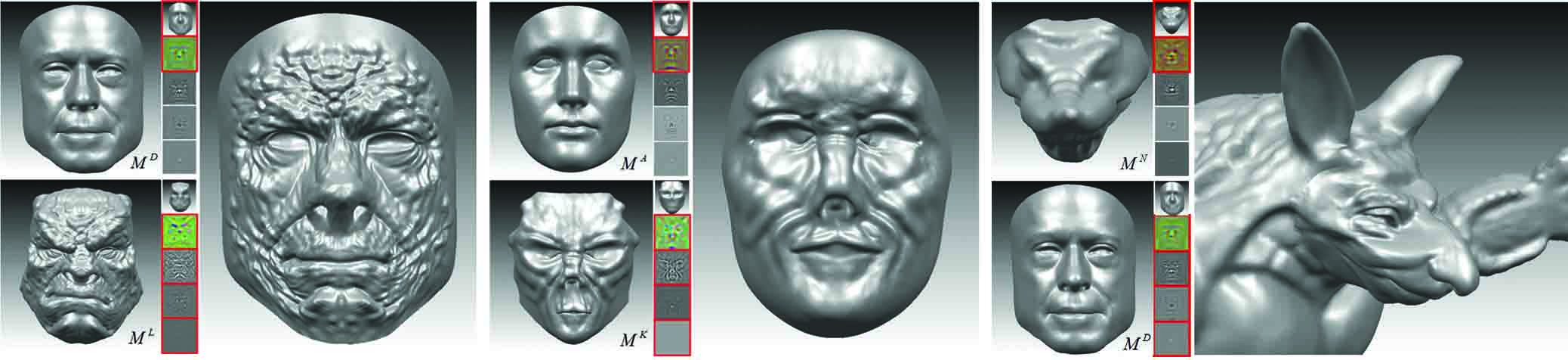

Blending multiscale nonhuman faces

The designers transfer the details of a monster face to a human face, an alien face to a human face, and human faces to an armadillo face.

“Our method is not restricted to human faces. Transferring details between sculpted nonhuman creatures and a human actor’s face is an important task in visual effects,” the authors say.
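Detail transfer between a creature and a human face can be sketched with coarse-to-fine layering: keep the target's base and coarse layers, and swap in the source's finer layers. The Python below assumes both faces are already stored in a shared parameter space as a base map plus detail layers ordered coarse to fine; the `transfer_fine_details` helper and its cutoff parameter are hypothetical, and this is a simplification rather than the authors' method.

```python
# Hypothetical sketch of scale-selective detail transfer (not the authors' method).
from typing import List, Tuple

# Assumed layout: (base map, detail layers ordered coarse -> fine), all sampled
# on one shared parameter-space grid.
Face = Tuple[List[float], List[List[float]]]


def transfer_fine_details(target: Face, source: Face, first_source_scale: int) -> List[float]:
    """Keep the target's base and coarse layers; take every layer from
    first_source_scale onward (the finer ones) from the source."""
    t_base, t_details = target
    _, s_details = source
    if len(t_details) != len(s_details):
        raise ValueError("both faces need the same number of scales")
    layers = t_details[:first_source_scale] + s_details[first_source_scale:]
    out = list(t_base)
    for layer in layers:
        if len(layer) != len(out):
            raise ValueError("all maps must share the same grid")
        out = [o + d for o, d in zip(out, layer)]
    return out


human = ([1.0, 1.0, 1.0], [[0.1, 0.1, 0.1], [0.0, 0.0, 0.0]])     # smooth skin
monster = ([5.0, 5.0, 5.0], [[2.0, 2.0, 2.0], [0.3, -0.3, 0.3]])  # bumpy at the fine scale
# Human overall shape, monster's fine bumps:
print(transfer_fine_details(human, monster, first_source_scale=1))
```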

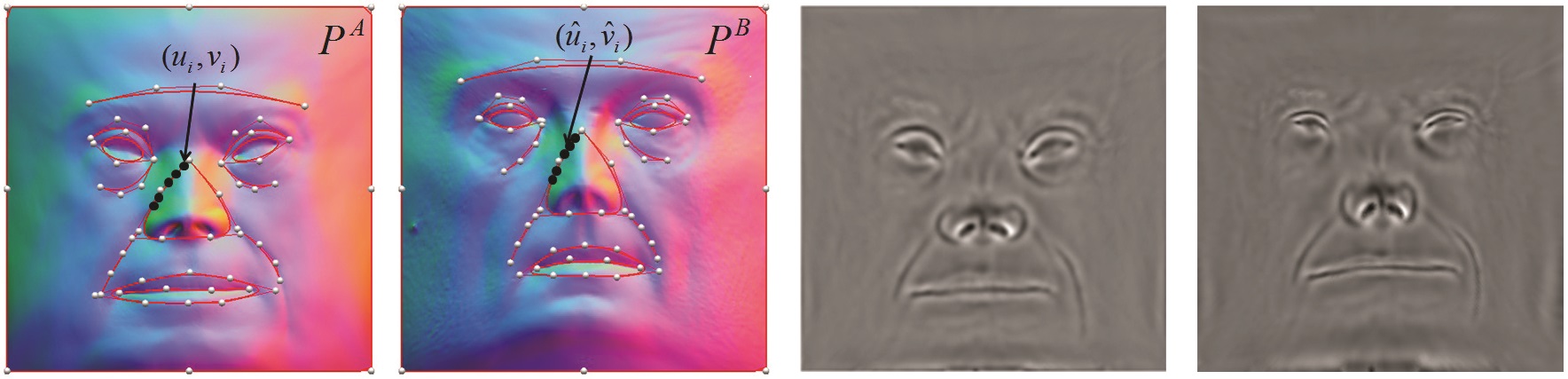

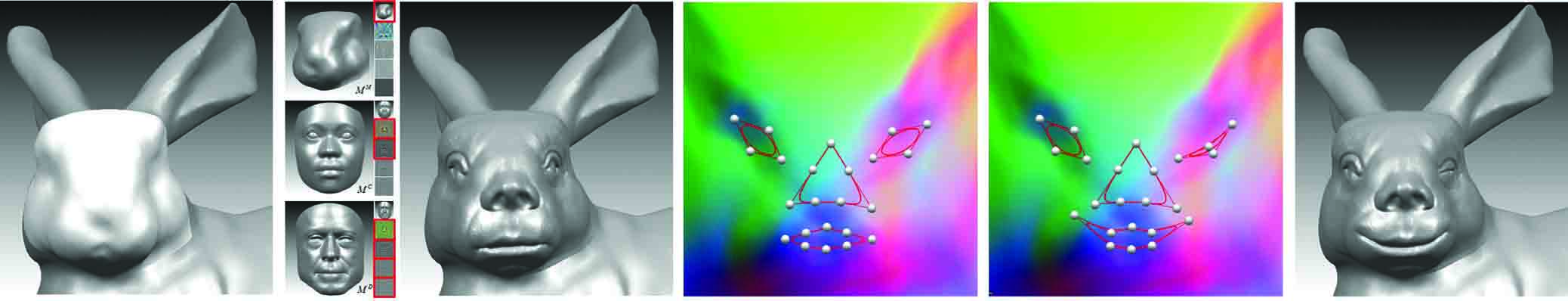

A bunny with a human face

Even a rabbit is anthropomorphized.

This bunny model shows details transferred from human multiscale face models (MFMs).

Let’s take it step by step.

First, image (a) is the Stanford bunny, a model commonly used in computer graphics. In (b), artists transferred details from the face models to it. Image (c) shows the semantic meaning assigned to the bunny’s ambiguous face, and (d) shows the intuitive user interface in the 2D parameter space.

Then, ta-da! The last image, (e), shows the facial animation result.

“The multiscale face blending together with feature curve controls in the parameter space provides a practical solution for transferring face details to an abstract face. For example, face regions such as the eyes, nose, and mouth on the Stanford bunny model are somewhat ambiguous,” the authors write.
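Feature curves in parameter space effectively tell the system which parts of an ambiguous face play the role of eyes, nose, and mouth. A heavily simplified, hypothetical 1D version of that idea is a piecewise-linear warp that maps marked feature positions on the target (say, the bunny) onto the matching positions on a human source, after which source details can be resampled at the warped coordinates. The mark positions, function names, and the 1D reduction are all assumptions made for illustration.

```python
# Hypothetical 1D sketch of feature-guided correspondence (not the authors' method).
from bisect import bisect_right
from typing import Callable, List, Sequence


def piecewise_linear_warp(dst_marks: Sequence[float], src_marks: Sequence[float]) -> Callable[[float], float]:
    """Map a target coordinate to a source coordinate so that dst_marks[i] -> src_marks[i].

    Both mark lists must be strictly increasing and should include the ends 0.0 and 1.0.
    """
    if len(dst_marks) != len(src_marks) or len(dst_marks) < 2:
        raise ValueError("need matching lists of at least two marks")
    for marks in (dst_marks, src_marks):
        if any(b <= a for a, b in zip(marks, marks[1:])):
            raise ValueError("marks must be strictly increasing")

    def warp(t: float) -> float:
        i = min(max(bisect_right(dst_marks, t) - 1, 0), len(dst_marks) - 2)
        d0, d1 = dst_marks[i], dst_marks[i + 1]
        s0, s1 = src_marks[i], src_marks[i + 1]
        return s0 + (t - d0) * (s1 - s0) / (d1 - d0)

    return warp


def sample(values: List[float], s: float) -> float:
    """Linearly interpolate a uniformly sampled 1D map at s in [0, 1]."""
    x = min(max(s, 0.0), 1.0) * (len(values) - 1)
    i = min(int(x), len(values) - 2)
    t = x - i
    return values[i] * (1.0 - t) + values[i + 1] * t


# Vertical positions of mouth, nose, and eyes: hypothetical marks on the bunny
# (target) and on a human source face.
bunny_marks = [0.0, 0.45, 0.55, 0.8, 1.0]
human_marks = [0.0, 0.3, 0.5, 0.7, 1.0]
warp = piecewise_linear_warp(bunny_marks, human_marks)

human_detail = [0.0, 0.0, 0.2, 0.0, 0.5, 0.0, 0.3, 0.0, 0.0, 0.0, 0.0]  # toy profile
bunny_detail = [sample(human_detail, warp(k / 10)) for k in range(11)]
print([round(x, 3) for x in bunny_detail])
```

In two dimensions, the same principle would use the artist's curves rather than single marks, but the design choice is the same: an explicit, artist-controlled correspondence instead of an optimization that might run slowly or fail.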

How well did the system work?

The authors surveyed 10 animation artists, who were given 10 minutes to perform a series of tasks. Afterward, the artists were asked whether they could synthesize a new face and whether the new modeling method made doing so easy and intuitive.

On a scale of 1 to 5, the average score for both questions was 4.

Ultimately, their goal is to make the job of animation artists easier. And it seems the researchers are well on their way to achieving it.

“To interactively handle ambiguous and nonhuman faces, we focus on artist controls rather than formulating an optimization problem that might run slowly and fail occasionally. However, our method does not prohibit adding such a step. Trade-offs between interactive semantic controls and automated optimization would be a good discussion point. A solution to satisfy both targets would be ideal and is left to future study,” they say.

About Lori Cameron

Lori Cameron is a Senior Writer for the IEEE Computer Society and currently writes regular features for Computer magazine, Computing Edge, and the Computing Now and Magazine Roundup websites. Contact her at l.cameron@computer.org. Follow her on LinkedIn.