Current hiring trends indicate that your next job interview could very well be with a robot.

While employers may like the automation, job hunters’ reactions may depend on whether or not they get the job—and the salary they deserve.

In addition to rifling through resumes, AI bots are now doing the interviewing too. Their surprising success has researchers racing to build the best robot possible—even cobbling together a model from several other artificial intelligence systems.

Engineers from Estonia and Spain studied the AI models of several other research groups—all of whom participated in the 2017 ChaLearn Looking at People (LAP) CVPR/IJCNN Competition—and extracted their best features to create a new AI interviewer with almost 90 percent accuracy in determining a job applicant’s personality traits.

What sets their study apart from others is the vast amount of data they culled from prior models, the researchers said.

The AI screening manager is so sophisticated that it will read your facial features, voice intonation, and word choices. Then it rates your personality on the Big Five Personality Traits scale: agreeableness, extroversion, neuroticism, openness, and conscientiousness.

Sound unnerving?

Employers love it because the machine cuts the grunt work and assembles a list of qualified candidates in short order.

But the jury is still out for job hunters who feel they might not get a fair shake from a robot. It’s not just how dehumanizing the robotic interview seems. People in conversation feed off each other’s responses. That doesn’t happen with a robot. Applicants get no feedback after a rejection either, no idea how to improve.

They get the equivalent of a virtual stone wall.

On the other hand, applicants will often conduct the interview at their leisure on a computer from the comfort of their own home. They can also find out quickly if they have been eliminated from the pool so they can move on to the next opportunity.

While it remains to be seen how much criticism the robotic interviews will receive, it seems automation is here to stay. And it will combine old-fashioned first impressions with a high tech analysis of who that robot really thinks you are.

“Impression plays a major role in personality analysis,” the researchers said. They cited one recent study that “found that 7 percent of a person’s impression depends on the spoken word, 38 percent on vocal utterances, and 55 percent on facial expressions.”

The above photo “shows example video frames from the database with extreme positive and negative values for each of the six labels, illustrates the importance of the visual aspect of impressions in job interview invitations as well as personality analysis,” say the authors of “Integrating Vision and Language for First-Impression Personality Analysis.”

“However, in automatic personality analysis it is important to look beyond the theoretically higher influence rate of facial expressions on likeability. For example, negative speech could reverse the positive impression of a person who looks friendly and projects a nice visual image,” the researchers say. They are Jelena Gorbova, Egils Avots, Iiris Lüsi, Mark Fishel, and Gholamreza Anbarjafari, all of the University of Tartu, Estonia; and Sergio Escalera of the University of Barcelona and the Computer Vision Center.

In short, the words we use and our micro-expressions will be examined by the unflinching eye of artificial intelligence. It’s a whole new ball game. So, buckle up.

Welcome to ‘automatic personality analysis’

Right now, nearly all Fortune 500 companies use some sort of automation in their hiring process. Current systems can blow through tens of thousands of applications per day compared to several dozen on a good day for a human hiring manager.

They can also shave weeks off the more labor-intensive screening tasks—reading resumes, administering tests, analyzing responses to interview questions, and assessing personality traits.

“First impressions influence many everyday judgments—for example, in choosing a job candidate with the right professional skills. These skills can be assessed with a set of test assignments, but because it is time-consuming and requires considerable resources to interview each candidate in person, researchers have explored automatic personality analysis using different input data, such as human speech,” the authors say.

How the AI interviewer works

The interviewer model developed by the authors is simply a video processing method that assesses the personalities of job candidates to see if they are a match for a certain position.

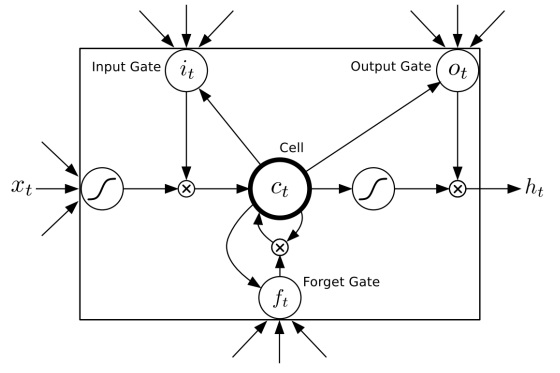

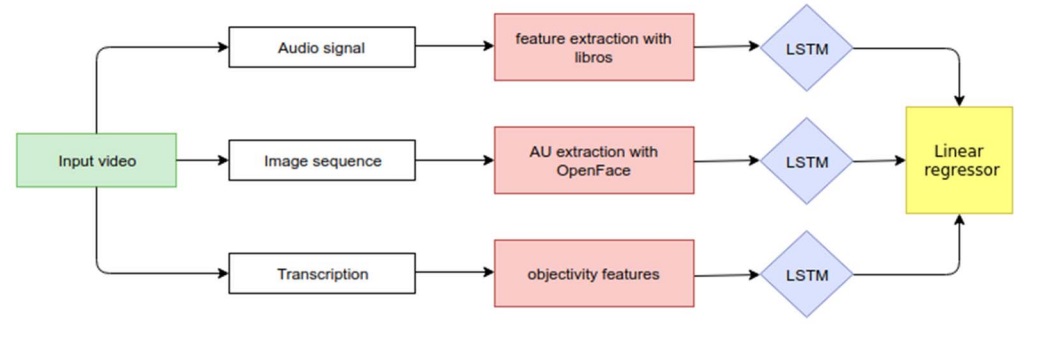

The model extracts candidate data from three sources—audio, image sequences, and spoken text—and processes it with the help of a long short-term memory cell-based network (LSTM).

The algorithm—which uses the expertise of psychologists, data scientists, and software engineers—then plows through an enormous database of video clips to fine-tune its prediction capabilities through deep learning.

Crowdsourcing personality traits with 10,000 videos

For their model, the authors used a dataset containing a whopping 10,000 video clips and asked users on the crowdsourcing platform Amazon Mechanical Turk (AMT) to evaluate the clips.

“Our system estimates a person’s Big Five Personality Trait scores using visual (frames extracted from video), paralinguistic, and lexical features. Its performance significantly improves with a large set of different personality aspects. Exceptional cases highlight the importance of multi-modal processing for this task,” say the authors.

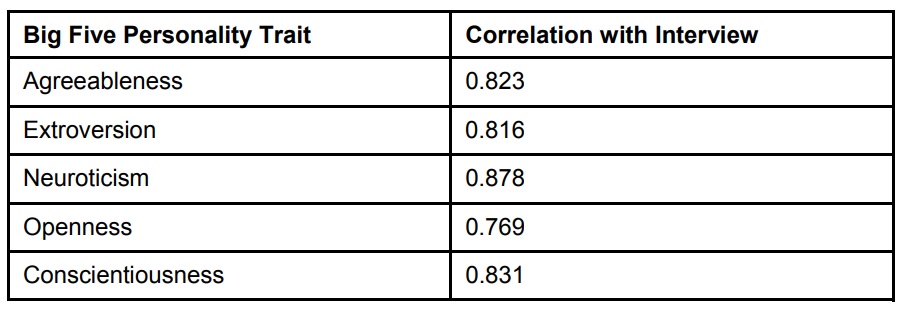

The AMT crowd was asked to rate all five personality traits on a scale of 0 to 6. Those scores were then correlated with a separate “interview” rating that indicated whether the subjects in the videos should be invited back for another interview.

The correlation results can be seen in the table below.
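To make the idea of correlating trait scores with the “interview” rating concrete, here is a minimal sketch of a Pearson correlation in plain Python. The article does not say which correlation measure the researchers used, so Pearson is an assumption, and the ratings below are made-up numbers rather than data from the study.

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)

# Hypothetical crowd ratings for five clips on the 0-to-6 scale
# (illustrative values only, not taken from the researchers' data).
conscientiousness = [1.0, 2.5, 3.0, 4.5, 5.0]
interview_rating = [1.5, 2.0, 3.5, 4.0, 5.5]

r = pearson(conscientiousness, interview_rating)
print(f"correlation with interview rating: {r:.3f}")
```

A value near 1 would mean that clips rated high on the trait also tend to be rated as worth inviting back; a value near 0 would mean the trait tells you little about the interview rating.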

The model developed by the authors analyzes facial expressions, gestures, vocal characteristics, and language patterns. Provided employers fine-tune their interview questions and job qualifications, the system responds with amazing accuracy—upwards of 89.7 percent.

Block diagram of the researchers’ proposed method, which has a temporal component consisting of extracted audio and video features and a natural language processing component consisting of extracted word samples. These components are processed by three separate long short-term memory (LSTM) neural network cells; the hidden features are further processed by a linear regressor and then fed to an output layer.
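The final stage described in that caption, where hidden features from the three LSTM cells pass through a linear regressor and into an output layer, can be sketched roughly as follows. This is an illustration under stated assumptions, not the researchers' implementation: the function name `fuse_and_score`, the hidden-feature sizes, the weights, and the sigmoid used to squash each score into the 0-to-1 range are all hypothetical.

```python
import math

TRAITS = ["agreeableness", "extroversion", "neuroticism",
          "openness", "conscientiousness"]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fuse_and_score(audio_h, video_h, text_h, weights, biases):
    """Concatenate the three hidden vectors and map them to trait scores."""
    fused = list(audio_h) + list(video_h) + list(text_h)
    scores = {}
    for trait, row, bias in zip(TRAITS, weights, biases):
        raw = sum(w * f for w, f in zip(row, fused)) + bias  # linear regressor
        scores[trait] = sigmoid(raw)  # assumed squashing to the 0..1 range
    return scores

# Made-up hidden features (two per modality) and made-up regressor weights.
audio_h, video_h, text_h = [0.2, -0.1], [0.7, 0.4], [-0.3, 0.5]
weights = [[0.1 * (i + j) for j in range(6)] for i in range(5)]
biases = [0.0] * 5

scores = fuse_and_score(audio_h, video_h, text_h, weights, biases)
for trait, score in scores.items():
    print(f"{trait}: {score:.2f}")
```

In a trained system the weights would be learned from the labeled clips rather than written by hand; the point here is only the shape of the computation, with three streams in and one score per trait out.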

“We consider each clip from the database separately as a speech signal, a set of video frames, and a set of words that the person uses when speaking,” say the authors.

Using the Big Five Personality Traits and LSTM to build a composite model

Oliver P. John and Sanjay Srivastava, of the Department of Psychology and Institute of Personality and Social Research at the University of California at Berkeley, are credited with creating the so-called Big Five Personality Traits, which help psychologists identify someone’s personality type based on their level of agreeableness, extroversion, neuroticism, openness, and conscientiousness.

Using the best features of competitor models, the authors designed their hiring model and then implemented a recurrent neural network called a long short-term memory cell-based network (LSTM) through which data is fed to replicas of the same neural network.

Each time the data is looped through a network replica, the system carries forward important information from the previous step, so later predictions can draw on what came earlier in the clip.
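For readers curious about what “retaining information” looks like in practice, here is a minimal sketch of a single LSTM cell step using the standard textbook gate equations, reduced to scalar values for readability. It is not the researchers' code: the gate weights in `params` and the input sequence are invented for illustration, and a real model would use vectors and learned weights.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, p):
    """One step of a scalar LSTM cell; p maps gate name -> (w_x, w_h, bias)."""
    def pre(gate):
        w_x, w_h, b = p[gate]
        return w_x * x + w_h * h_prev + b

    forget = sigmoid(pre("forget"))          # how much old memory to keep
    write = sigmoid(pre("input"))            # how much new information to store
    expose = sigmoid(pre("output"))          # how much memory to reveal
    candidate = math.tanh(pre("candidate"))  # proposed new information

    c = forget * c_prev + write * candidate  # updated cell memory
    h = expose * math.tanh(c)                # hidden state passed to the next step
    return h, c

# Hypothetical weights and inputs, chosen only to show the recurrence.
params = {
    "forget": (0.5, 0.1, 1.0),
    "input": (0.6, -0.2, 0.0),
    "output": (0.4, 0.3, 0.0),
    "candidate": (0.9, 0.2, 0.0),
}

h, c = 0.0, 0.0
for step, x in enumerate([0.1, 0.8, -0.4, 0.3]):
    h, c = lstm_step(x, h, c, params)
    print(f"step {step}: hidden={h:.3f} memory={c:.3f}")
```

The same function and the same weights are reused at every step, which is what the “replicas of the same neural network” refers to; only the hidden state and memory change as the sequence is read.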

Software engineers like it.

“Many researchers have tried different types of recurrent neural networks. One of the most popular structures that has emerged is long short-term memory (LSTM) cell-based networks,” the authors say.

Refining the hiring model through deep learning

The database video frames shown earlier illustrate how positive facial expressions receive naturally higher crowdsourced scores than negative ones. However, if a subject’s positive facial expression is marred by negative speech, the score reverses.

Each layer of accurately labeled audio, video, and lexical data refines the prediction capabilities of the hiring model.

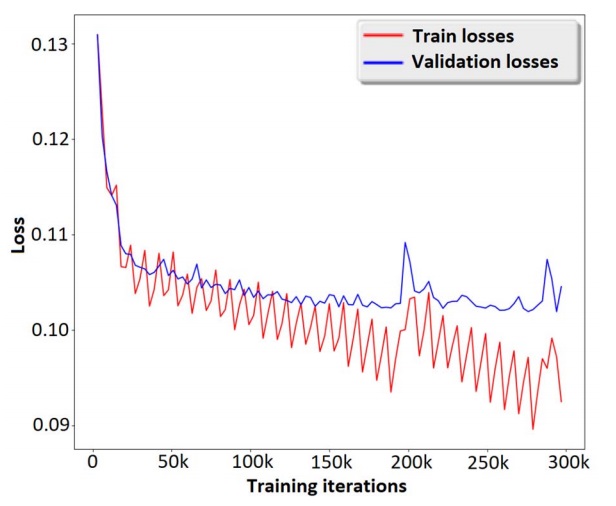

To train and evaluate the model, the authors used TensorFlow, an open source software library that ensures fast learning time—helpful when doing over 300,000 iterations.

As can be seen in the graph below, training and validation losses taper off considerably as test iterations increase.

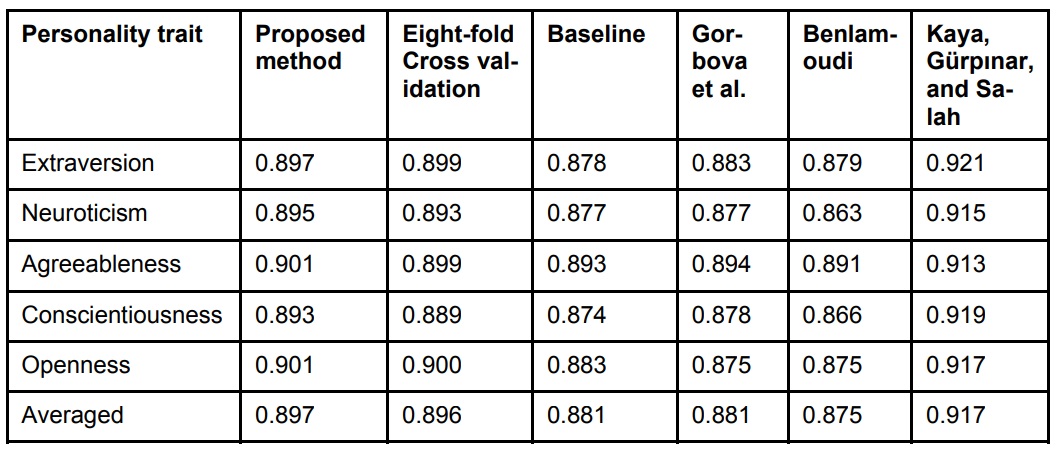

To show that their proposed model achieved stable results, the authors applied eight-fold cross-validation on the training and validation datasets, with the “eight” referring to the number of groups that the given data sample is split into.
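As a rough illustration of how such a split works, the sketch below divides sample indices into eight groups and lets each group serve once as the validation set while the other seven are used for training. The function name `k_fold_indices` and the sample count are hypothetical, and the sketch does not shuffle the data, which a real experiment typically would.

```python
def k_fold_indices(n_samples, k=8):
    """Yield (train, validation) index lists; each sample is validated once."""
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0)
                  for i in range(k)]
    start = 0
    for size in fold_sizes:
        validation = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, validation
        start += size

# Hypothetical example: 20 clips split into eight folds.
for fold, (train, validation) in enumerate(k_fold_indices(20, k=8)):
    print(f"fold {fold}: train on {len(train)} clips, validate on {validation}")
```

If the scores are similar across all eight rounds, the model's performance does not depend much on which clips happened to land in the validation group.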

For the final model prediction of the Big Five Personality Traits (column one), the results of the cross validation (column three) as well as how closely they compare to the authors’ proposed method (column two) are illustrated in the table below.

The remaining table data, culled from the ChaLearn competition, show how participants measured up against a simple baseline (column four), and against their competitors (columns five through seven).

The team in column seven won first place with a model that, while effective, was admittedly more complex than the authors’ proposed model. In the end, both teams used the same comparison features and attained similarly high results.

“From obtained video, we extract features from three modalities—audio, image sequences, and spoken text—and process these with the help of an LSTM unit. Our method achieves reasonably accurate performance that can be further improved by tuning the LSTM parameters,” say the authors.

What the future holds

According to the authors, the key to improving their hiring model rests in simply tweaking the long short-term memory cell-based network.

“Our method achieves reasonably accurate performance that can be further improved by tuning the LSTM parameters,” they say.

In the end, the ultimate question is whether a robotic system is capable of revealing what a face-to-face interview can’t.
