Imagine playing a video game without a hand-held device.

While not yet widespread, "bare-hand" interface technology has been making strides for years, producing numerous robust systems for a variety of uses: slide presentations, teleconferences, robot interaction, and, of course, gaming.

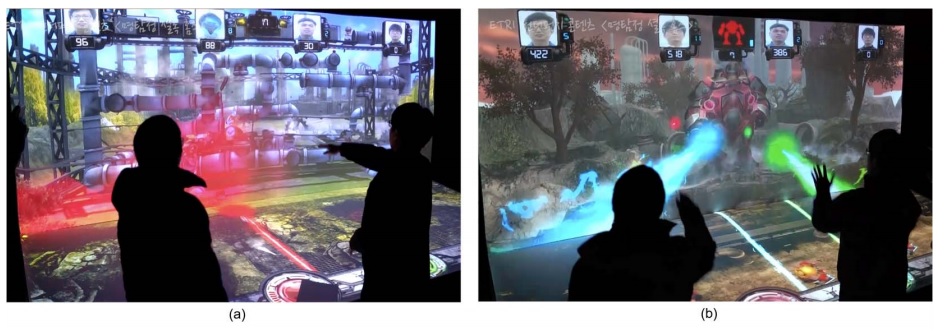

Now, a group of researchers from the Electronics and Telecommunications Research Institute (ETRI) has taken the technology a step further. They developed a new bare-hand gesture interface for large screens that uses improved algorithms to detect players' upper-body movements. It's called ThunderPunch.


Conventional systems place the camera in front of the user. ThunderPunch instead mounts its cameras on the ceiling so that they do not block the large screen.

ThunderPunch also uses real-time algorithms that detect multiple upper-body poses and recognize punching and touching gestures from top-view depth images.

To demonstrate the system, the team built a game around these features. In subsequent tests, the proposed algorithm outperformed competing algorithms.

Read more in “ThunderPunch: A bare-hand, gesture-based, large interactive display interface with upper-body-part detection in a top view.”